Here's how OpenAI token count is computed in Tiktokenizer - Part 1

In this article, we will review how OpenAI token count is computed in tiktokenizer. We will look at:

-

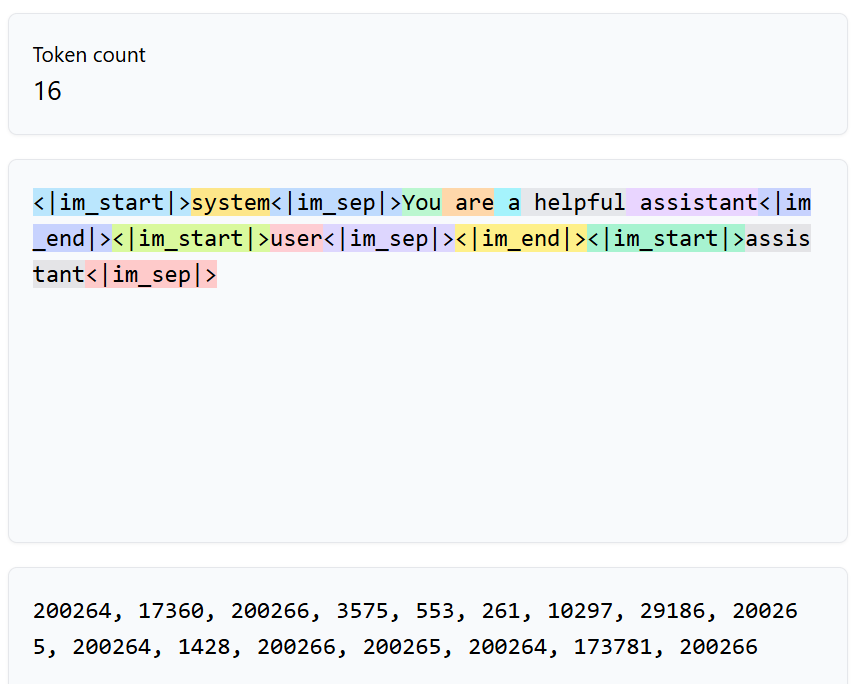

TokenViewer component

-

tokenizer

-

createTokenizer function

TokenViewer component

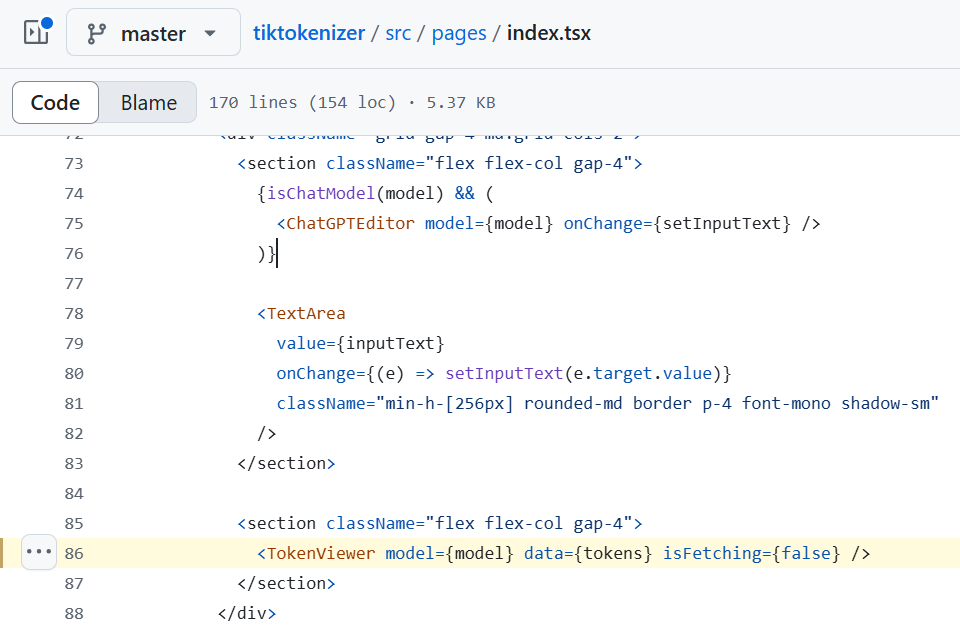

In tiktokenizer/src/pages/index.tsx, at line 86, you will find the following code:

```tsx
<section className="flex flex-col gap-4">
  <TokenViewer model={model} data={tokens} isFetching={false} />
</section>
```

And TokenViewer is imported as shown below:

```tsx
import { TokenViewer } from "~/sections/TokenViewer";
```

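This component takes three props:

- model: the selected model or encoding name
- data: tokens, the result of tokenizing the input text
- isFetching: a loading flag, passed as false here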
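Reading the call site as a type helps show what TokenViewer receives. The sketch below is a minimal illustration, assuming a hypothetical TokenizerResult shape with name, tokens, and count fields; the real types in tiktokenizer may be named and shaped differently, and the displayedTokenCount helper is also hypothetical.

```typescript
// Hypothetical result shape; tiktokenizer's real type may differ.
interface TokenizerResult {
  name: string;     // assumed: the model or encoding used
  tokens: number[]; // assumed: token ids for the input text
  count: number;    // assumed: how many tokens the input produced
}

// Props inferred from <TokenViewer model={model} data={tokens} isFetching={false} />.
interface TokenViewerProps {
  model: string | undefined;
  // undefined while the tokenizer query has no data yet,
  // because tokenizer.data?.tokenize(inputText) short-circuits.
  data: TokenizerResult | undefined;
  isFetching: boolean;
}

// Hypothetical helper: the label a viewer like this might render.
function displayedTokenCount(props: TokenViewerProps): string {
  if (props.isFetching || props.data === undefined) {
    return "loading";
  }
  return `${props.data.count} tokens`;
}
```

For example, displayedTokenCount({ model: "cl100k_base", data: undefined, isFetching: false }) returns "loading" until the tokenizer query has produced a result.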

So what does this TokenViewer component look like?

tokenizer

At line 43 in tiktokenizer/src/pages/index.tsx, you will find the following code:

```tsx
const tokenizer = useQuery({
  queryKey: [model],
  queryFn: ({ queryKey: [model] }) => createTokenizer(model!),
});

const tokens = tokenizer.data?.tokenize(inputText);
```

useQuery is imported from TanStack Query:

```tsx
import { useQuery } from "@tanstack/react-query";
```

The createTokenizer function is imported as shown below:

```tsx
import { createTokenizer } from "~/models/tokenizer";
```
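useQuery stores the value returned by queryFn in a cache under queryKey, and queryFn receives that key in its context, which is why the code destructures { queryKey: [model] }. Switching to a model that has not been loaded yet produces a new key, so queryFn runs and calls createTokenizer for it. The sketch below shows only this "one async result per key" idea in plain TypeScript; cachedByKey and fakeCreateTokenizer are hypothetical, and TanStack Query itself does much more (stale-time handling, refetching, retries, garbage collection, re-rendering).

```typescript
// Hypothetical helper, not TanStack Query's implementation:
// it keeps one Promise per key and never expires or refetches.
function cachedByKey<T>(factory: (key: string) => Promise<T>) {
  const cache = new Map<string, Promise<T>>();
  return (key: string): Promise<T> => {
    let pending = cache.get(key);
    if (pending === undefined) {
      // First request for this key: run the factory and remember its Promise.
      pending = factory(key);
      cache.set(key, pending);
    }
    // Later requests for the same key reuse the stored Promise.
    return pending;
  };
}

// Hypothetical stand-in for createTokenizer that records how often it runs.
let factoryCalls = 0;
async function fakeCreateTokenizer(model: string): Promise<{ model: string }> {
  factoryCalls += 1;
  return { model };
}

const getTokenizer = cachedByKey(fakeCreateTokenizer);
```

Calling getTokenizer("gpt-4") twice returns the same Promise and runs the factory once; a different key such as "cl100k_base" runs it again.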

createTokenizer function

In tiktokenizer/src/models/tokenizer.ts, you will find the following code at line 122:

```ts
export async function createTokenizer(name: string): Promise<Tokenizer> {
  console.log("createTokenizer", name);

  const oaiEncoding = oaiEncodings.safeParse(name);
  if (oaiEncoding.success) {
    console.log("oaiEncoding", oaiEncoding.data);
    return new TiktokenTokenizer(oaiEncoding.data);
  }

  const oaiModel = oaiModels.safeParse(name);
  if (oaiModel.success) {
    console.log("oaiModel", oaiModel.data);
    return new TiktokenTokenizer(oaiModel.data);
  }

  const ossModel = openSourceModels.safeParse(name);
  if (ossModel.success) {
    console.log("loading tokenizer", ossModel.data);
    const tokenizer = await OpenSourceTokenizer.load(ossModel.data);
    console.log("loaded tokenizer", name);
    return new OpenSourceTokenizer(tokenizer, name);
  }

  throw new Error("Invalid model or encoding");
}
```

oaiEncodings, oaiModels, and openSourceModels are imported as shown below:

```ts
import { oaiEncodings, oaiModels, openSourceModels } from ".";
```

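So, checking the three schemas in order, this function returns either:

- a TiktokenTokenizer, when name is an OpenAI encoding or an OpenAI model, or
- an OpenSourceTokenizer, when name is an open-source model.

If name matches none of them, it throws an "Invalid model or encoding" error.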
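The dispatch order in createTokenizer can be mirrored without any dependencies. This sketch assumes oaiEncodings, oaiModels, and openSourceModels behave like zod enum schemas, whose safeParse returns { success: true, data } on a match and { success: false, error } otherwise (the sketch keeps only the success flag and data). enumSchema, pickTokenizerKind, and the name lists are hypothetical placeholders, not tiktokenizer's actual lists.

```typescript
// Mimics the success/data part of zod's safeParse result without depending on zod.
type SafeParseResult<T> = { success: true; data: T } | { success: false };

// Hypothetical helper: a string "enum" schema with a safeParse method.
function enumSchema<T extends string>(values: readonly T[]) {
  return {
    safeParse(input: string): SafeParseResult<T> {
      return (values as readonly string[]).includes(input)
        ? { success: true, data: input as T }
        : { success: false };
    },
  };
}

// Illustrative lists only; the real ones are defined in tiktokenizer.
const encodings = enumSchema(["cl100k_base", "o200k_base"] as const);
const models = enumSchema(["gpt-4", "gpt-4o"] as const);
const ossModels = enumSchema(["example/open-model"] as const);

type TokenizerKind = "tiktoken" | "open-source";

// Same check order as createTokenizer: encoding, then OpenAI model, then open-source model.
function pickTokenizerKind(name: string): TokenizerKind {
  if (encodings.safeParse(name).success) return "tiktoken";
  if (models.safeParse(name).success) return "tiktoken";
  if (ossModels.safeParse(name).success) return "open-source";
  throw new Error("Invalid model or encoding");
}
```

For instance, pickTokenizerKind("cl100k_base") returns "tiktoken", while a name that is in none of the lists throws, just as createTokenizer does.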

You will find the definitions of oaiEncodings, oaiModels, and openSourceModels in tiktokenizer/src/models/index.ts, which is the module that the "." import in tokenizer.ts resolves to.

You will learn more about TiktokenTokenizer and OpenSourceTokenizer in the next article.

About me:

Hey, my name is Ramu Narasinga. I study codebase architecture in large open-source projects.

Email: ramu.narasinga@gmail.com

Want to learn from open-source code? Solve challenges inspired by open-source projects.